In this article, I’m going to cover various exploits that can work against the Django templating engine in a modern web application.

I’ll show how and why they work as exploits, and the risks of leaving them unfixed. Then, I’ll show you how to find them using a fuzzer I’ve been developing.

A quick introduction to Cross-Site-Scripting¶

Cross-Site-Scripting, or XSS, is the technique of exploiting web applications to trick users’ browsers into executing arbitrary (and malicious) JavaScript.

The malicious JavaScript code would be targeted to accomplish something like:

- Changing users’ passwords without their knowledge

- Data gathering

- Executing arbitrary actions

This exploit works based on the assumption that the HTML and JavaScript served from a website’s server is “trusted”.

If you visit your favourite website, you’d expect that the HTML and JavaScript served to you and rendered in your browser is “safe”. Because most modern applications mix User-Generated-Content (UGC) with static content, that expectation doesn’t always hold.

Some common examples of content which is rendered as part of the site DOM from a database would be:

- A comments section at the bottom of the page

- Names or profile pictures of users

Take a template that shows the name of the user who posted an article:

Article posted by {{ user }}.

This is fine if user = "Jim Smith", but if Jim is a bit more callous, then he could enter his name as <img src="https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif"/>.

Anyone viewing this page would then see the GIF that Jim injected into his username:

Article posted by <img src="https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif"/>

This is an example of Stored/Permanent XSS. There are 2 groups of XSS exploits, which we’ll explore:

- Reflected XSS

- Stored/Permanent XSS

Reflected XSS¶

Reflected XSS is common in both server-side and client-side applications. A common place for attackers to look for an XSS flaw is a search page. Take this (very) simple example of an HTML + JavaScript page that shows a search form and, if the query GET parameter is present, shows what the user searched for.

<html lang="en-AU">

<body>

<form method="get">

<label for="query">Search: </label>

<input type="text" id="query" name="query" />

<input type="submit" value="Search" />

</form>

<span id="search-query" ></span>

<!-- The results of the search query -->

<script type="application/javascript">

var searchParams = new URLSearchParams(document.location.search);

if (searchParams.has("query"))

{

document.getElementById("search-query").innerHTML = "You searched for " + searchParams.get("query")

}

</script>

</body>

</html>

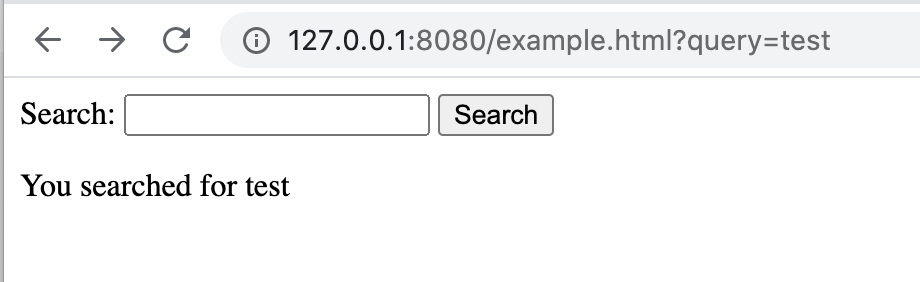

Your regular user would search a text string which is shown on the page:

An attacker could search for any valid HTML and it would become part of the DOM:

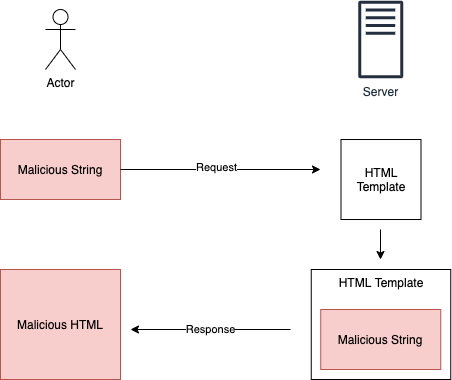

In the reflected XSS model, the malicious payload is sent straight back to whoever made the request rather than being stored. This type of attack is less dangerous than permanent XSS.

Attackers exploit reflected XSS by sending a crafted link and tricking a user into opening it, so that the reflected payload runs in the victim’s browser.

Stored/Permanent XSS¶

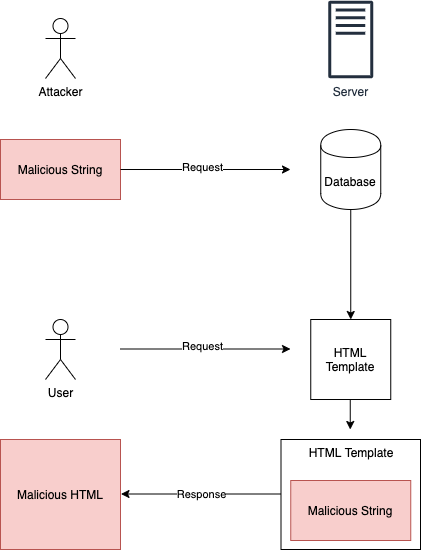

Permanent XSS attacks are far more dangerous to an application because the attack has the opportunity to impact all of the users of the site, including the site administrators.

The attack mechanism is the same as reflected XSS, but the server-side application stores the malicious payload in a database, or a file of some sort.

Some really basic examples I shared were user names, comments, and profile photos.

Permanent XSS attacks are often used for:

- Sending a “beacon” to the attacker with some details about the target, such as their IP address, user name, browser, OS, password, etc.

- Making POST requests to parts of the application which let the attacker take over an account by changing the password.

- Combining with SQL Injection vulnerabilities to exfiltrate data from the database.

Django fights back¶

Django assumes that all context data is “unsafe” unless otherwise specified.

This means that most forms of XSS attack don’t work with Django templates.

For example, if you wrote the following template:

<html lang="en-AU">

<body>

<form method="get">

<label for="query">Search: </label>

<input type="text" id="query" name="query" />

<input type="submit" value="Search" />

</form>

<span id="search-query" >You searched for {{ query }}</span>

</body>

</html>

Django would automatically escape the malicious string in the query context variable:

<img src="https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif"/>

To:

&lt;img src=&quot;https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif&quot;/&gt;

It does this by passing all string data through Python’s html.escape() function.

This function will:

- Replace any & with an &amp; HTML character reference

- Replace any < or > with an &lt; or &gt; HTML character reference

- Replace any " with an &quot; HTML character reference

- Replace any ' with an &#x27; HTML character reference
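To make the effect concrete, here is a small sketch that runs Python’s standard-library html.escape() over the GIF payload from earlier. It calls the function directly rather than going through Django’s template engine, so treat it as an illustration of the five replacements only.

```python
import html

# The payload Jim used as his "name" earlier in the article
payload = '<img src="https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif"/>'

# html.escape() replaces &, < and >, plus " and ' (quote=True is the default)
escaped = html.escape(payload)
print(escaped)
# &lt;img src=&quot;https://media.giphy.com/media/I3eVhMpz8hns4/giphy.gif&quot;/&gt;

# Show the character reference used for each of the five characters
for char in "&<>\"'":
    print(repr(char), "->", html.escape(char))
```

The browser renders the escaped text as literal characters, so the img tag never becomes part of the DOM.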

To say that escaping these 5 characters “protects” your application is a gross underestimation of XSS.

Caveats to Django XSS protection¶

There are many caveats to Django XSS protection, and as you learn more about XSS you’ll be able to spot them in your templates.

1. It only applies to the Django template engine¶

The XSS protection for Django is part of the Django templating engine. If your application heavily uses a client-side JavaScript framework (such as Angular, Vue, React) then the Django XSS protection is not helping you.

An example of a reflected XSS vulnerability is a third-party JavaScript component on your page. Many third-party JavaScript components, such as type-ahead widgets, have XSS flaws.

Furthermore, many Django extensions that rely on combined JavaScript/Python logic have XSS flaws. These include many form extensions like Date/Time selectors. Some of these flaws have been discovered and fixed, but many go unnoticed.

What can you do about this?

- Check your JavaScript and Python dependencies against a CVE database like Snyk.io

- Test the whole application (see later in this article) with malicious payloads

2. It does not protect against the misuse of “Safe Strings”¶

The Django XSS protection can be disabled when a string is marked as “safe”, either in the View logic by using the mark_safe() function, or in the template using the safe filter.

format_html("{} <b>{}</b> {}",

mark_safe(some_html),

some_text,

some_other_text,

)

Alternatively, in the template you can use the safe filter to disable the HTML escape protection:

<span id="search-query" >You searched for {{ query | safe }}</span>

It may seem obvious that explicitly disabling XSS protection leaves you unprotected against XSS, but “Use mark_safe()” is the accepted solution to a worrying number of Django questions on StackOverflow.com.

What can you do about this?

- Search your code base for use of the safe filter

- Search your code base for the use of the mark_safe function by using [my PyCharm extension]

- Use bleach to clean out any potentially vulnerable attributes
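If you don’t use PyCharm, the first two searches can be roughly approximated with a short script. This is only a sketch, not the extension mentioned above: the function name find_escape_bypasses, the two regular expressions, and the assumption that templates end in .html are all mine, so adjust them to your project.

```python
import re
from pathlib import Path

# Hypothetical patterns: tune them for your own code base.
SAFE_FILTER = re.compile(r"\|\s*safe\b")     # {{ value|safe }} in templates
MARK_SAFE = re.compile(r"\bmark_safe\s*\(")  # mark_safe(...) calls in Python


def find_escape_bypasses(root):
    """Return (path, line number, line) for each suspicious line under root."""
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in {".html", ".py"}:
            continue
        # Templates are checked for the safe filter, Python files for mark_safe()
        pattern = SAFE_FILTER if path.suffix == ".html" else MARK_SAFE
        text = path.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((path, number, line.strip()))
    return hits
```

Calling find_escape_bypasses(".") from the project root returns a list you can print or review by hand. Every hit still needs a human to decide whether the marked string can ever contain user input.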

Here is an example of a template filter that I wrote, which uses Bleach to remove any potentially harmful data:

from django import template

from django.utils.safestring import mark_safe

import bleach

register = template.Library()

_ALLOWED_ATTRIBUTES = {

'a': ['href', 'title'],

'img': ['src', 'class'],

'table': ['class']

}

_ALLOWED_TAGS = ['b', 'i', 'ul', 'li', 'p', 'br', 'a', 'h1', 'h2', 'h3', 'h4', 'ol', 'img', 'strong', 'code', 'em', 'blockquote',

'table', 'thead', 'tr', 'td', 'tbody', 'th']

@register.filter()

def safer(text):

    return mark_safe(bleach.clean(text, tags=_ALLOWED_TAGS, attributes=_ALLOWED_ATTRIBUTES))

You’d use this filter as a drop-in replacement for safe:

<span id="search-query" >You searched for {{ query | safer }}</span>

3. Some types of attribute escape are not protected¶

Many XSS techniques use HTML/JavaScript event handlers as attributes of existing tags instead of new <script> tags.

For example, many HTML elements support an onload event. The HTML can have any JavaScript action as a trigger and it doesn’t require any user interaction:

<img src=/img/home-bg.jpg onload=alert(1)>

If your Django template had the src attribute replaced with a context variable:

<img src={{ profile_photo }}>

The attacker would set their profile photo URL to "/img/home-bg.jpg onload=alert(1)" to accomplish this attack.

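Django’s XSS protection doesn’t help here: the payload contains none of the characters that get escaped, and because the src attribute isn’t wrapped in quotes, the space ends the src value and the browser treats onload as a separate attribute.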

What can you do about this?

- Always quote your attributes if they come from user-generated/untrusted sources
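The difference quoting makes can be sketched outside of Django with html.escape() and plain string formatting. This is an approximation of what the template would render, not Django’s own rendering code.

```python
import html

# Attacker-controlled profile photo URL from the example above
profile_photo = "/img/home-bg.jpg onload=alert(1)"

# None of the five escaped characters appear, so escaping changes nothing
escaped = html.escape(profile_photo)
assert escaped == profile_photo

# Unquoted: the space ends the src value and onload becomes its own attribute
unquoted = "<img src={}>".format(escaped)

# Quoted: the whole value, space included, stays inside the src attribute
quoted = '<img src="{}">'.format(escaped)

# Breaking out of the quotes would need a ", which escaping neutralises
breakout = html.escape('x" onload="alert(1)')

print(unquoted)
print(quoted)
print(breakout)
```

With quotes in place, the attacker needs a literal " to escape the attribute, and that is one of the characters Django does replace.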

4. It does not protect against other attributes which accept JavaScript¶

Let’s say you let your users add a link to their personal website that shows up on their profile page inside your application.

<a href="{{ user.profile_url }}">Website</a>

What could go wrong?

Well, the attacker can just put "javascript:do_stuff(xxvb)" as the link to their website, so if the user clicks on the link, it executes whatever JavaScript the attacker wishes.

You could check for user.profile_url.startswith("javascript:"), however the following are also valid in most browsers:

- <a href="JaVaScript:alert(1)">XSS</a> (the protocol is case insensitive)

- <a href=" javascript:alert(1)">XSS</a> (the characters \x01-\x20 are allowed before the protocol)

- <a href="javas cript:alert(1)">XSS</a> (the characters \x09,\x0a,\x0d are allowed inside the protocol)

- <a href="javascript \x09:alert(1)">XSS</a> (the characters \x09,\x0a,\x0d are allowed after protocol name before the colon)

What can you do about this?

- Sanitize links before they’re stored in the database, or

- Don’t offer this behaviour on the application.
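One way to sanitize links is to allow only a short list of schemes instead of trying to block javascript: in all its disguises. The sketch below uses only the standard library. The function name is_safe_profile_url and the choice of http and https as the allowed schemes are my assumptions, not something Django provides.

```python
from urllib.parse import urlsplit

# Assumption: profile links only ever need to point at web pages.
ALLOWED_SCHEMES = {"http", "https"}

# Characters \x00-\x20, which browsers ignore at the start of a URL
LEADING_JUNK = "".join(chr(code) for code in range(0x21))


def is_safe_profile_url(url):
    """Return True only for absolute http(s) URLs."""
    # Browsers drop tabs and newlines anywhere in a URL, so drop them too
    cleaned = "".join(ch for ch in url if ch not in "\t\r\n")
    cleaned = cleaned.lstrip(LEADING_JUNK)
    try:
        parts = urlsplit(cleaned)
    except ValueError:
        return False
    # urlsplit() lower-cases the scheme, so JaVaScript: is caught as well
    return parts.scheme in ALLOWED_SCHEMES and bool(parts.netloc)


print(is_safe_profile_url("https://example.com/me"))
print(is_safe_profile_url("JaVaScript:alert(1)"))
print(is_safe_profile_url("javas\tcript:alert(1)"))
```

Because the check is an allow-list, anything it doesn’t recognise is rejected, including relative paths and schemes such as data:. You would run it when the form is validated, before the link is stored.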

5. html.escape() does not escape JavaScript template strings¶

The escape function that Django XSS protection uses will escape the string quotes ' and ".

html.escape() does not escape the backtick ` used in ECMAScript template literals. Template literals are part of the ES2015 specification (supported in any browser except IE).

This is valid ES6:

let name = `Ryan`;

console.log(`Hi my name is ${name}`); // Hi my name is Ryan

6. It does not protect against the data protocol base64 encoded strings¶

<iframe src="{{ company_website }}"></iframe>

You can use base64 to encode any HTML string and get around the escape() filter. If you mark the URL using the data protocol and base64 encoding, the browser will decode the string and load the HTML.

<script>alert('hi');</script> in base64 is PHNjcmlwdD5hbGVydCgnaGknKTs8L3NjcmlwdD4=, so the data protocol string is:

data:text/html;base64,PHNjcmlwdD5hbGVydCgnaGknKTs8L3NjcmlwdD4=

This doesn’t contain any of the “unsafe” entities that html.escape() looks for.
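You can check this yourself with the standard library. The sketch below rebuilds the data URL from the script tag and confirms that html.escape() returns it unchanged.

```python
import base64
import html

markup = "<script>alert('hi');</script>"

# Base64 output only uses A-Z, a-z, 0-9, +, / and =
encoded = base64.b64encode(markup.encode("ascii")).decode("ascii")
data_url = "data:text/html;base64," + encoded
print(data_url)

# None of those characters are among the five that html.escape() replaces
unchanged = html.escape(data_url) == data_url
print(unchanged)
```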

7. It does not protect against unquoted JavaScript injections¶

Let’s say that you have a Spinner widget that lets the user select their price range for buying a car.

They can enter their price range as $5000 - $10000 and you set the minimum and maximum values for this widget inside your template.

Because they are JavaScript integers, you don’t use quotes:

<script type=text/javascript src="ext/spinner.js"></script>

<script type=text/javascript>

var spinner = new SpinnerWidget();

spinner.minimum = {{ minimum_price }} ;

spinner.maximum = {{ maximum_price }} ;

spinner.show();

</script>

In this example, minimum_price can be 0 ; malicious_code() and it will inject the JS directly into the rendered page.

Again, the XSS protection in Django will not protect you here, because the payload doesn’t contain any quotes or other characters that html.escape() replaces.

What can you do about this?

- Move this logic into an API, or

- Validate the input
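For numeric values like these, validating the input can be as simple as forcing the value to an integer in the view before it reaches the context. This is a minimal sketch: clean_price is a hypothetical helper name, and falling back to a default instead of rejecting the request is an assumption you may not want in your application.

```python
def clean_price(raw_value, default=0):
    """Return raw_value as a non-negative int, or default if it isn't one."""
    try:
        value = int(raw_value)
    except (TypeError, ValueError):
        # Anything that isn't a plain integer, such as "0 ; malicious_code()"
        return default
    return value if value >= 0 else default


print(clean_price("5000"))
print(clean_price("0 ; malicious_code()"))
```

Once minimum_price and maximum_price are Python integers, the template can only ever render digits into the script block.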

XSS Fuzzing to detect permanent and reflective vulnerabilities¶

Because there are so many potential caveats to Django XSS protection, I decided to write a testing utility for detecting possible vulnerabilities in templates.

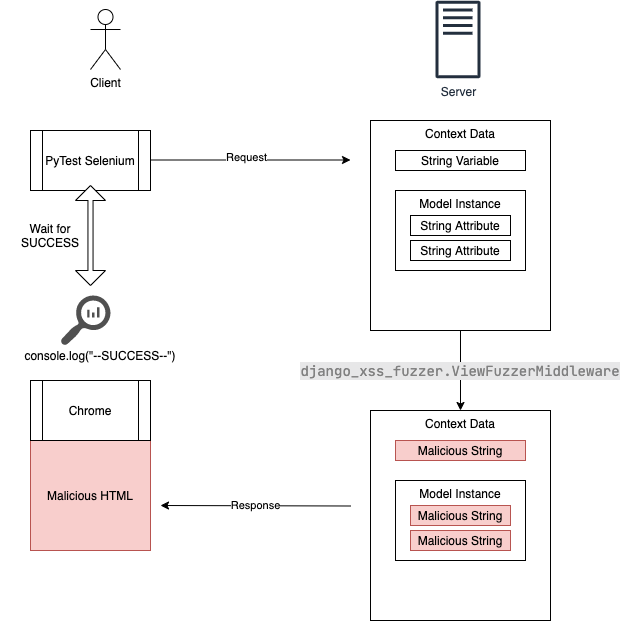

It works as a piece of Django Middleware that you use whilst testing, which replaces any string-like value in the context data with a malicious string.

So far, I’ve added the following test strings. Each one will try and call console.log('--SUCCESS[field]--') to write to the JavaScript console log to prove the flaw.

DEFAULT_PATTERNS = (

XssPattern('<script>throw onerror=eval,\'=console.log\x28\\\'{0}\\\'\x29\'</script>', "Script tag with onerror event"),

XssPattern('x onafterscriptexecute="console.log(\'{0}\')"', "non-quoted attribute escape"),

XssPattern('x onafterscriptexecute="console.log(`{0}`)"', "non-quoted attribute escape with backticks"),

XssPattern('<script>console.log(`{0}`)</script>', "template strings"),

XssPattern('x onafterprint="console.log(\'{0}\')"', "non-quoted attribute escape on load"),

XssPattern('x onerror="console.log(\'{0}\')"', "non-quoted attribute escape on load"),

XssPattern('x onafterprint="console.log(`{0}`)"', "non-quoted attribute escape on load with backticks"),

XssPattern('+ADw-script+AD4-console.log(+ACc-{0}+ACc-)+ADw-/script+AD4-', "UTF-7 charset meta"),

XssPattern('data:text/javascript;base64,Y29uc29sZS5sb2coJy0tU1VDQ0VTU1tdLS0nKQ==', "JS-encoded base64, payload is '--SUCCESS[]--'")

)

The middleware is very aggressive, so don’t deploy this anywhere other than a testing environment!

To install via pip

$ pip install django-xss-fuzzer

Add ViewFuzzerMiddleware to your middleware list for a test environment.

MIDDLEWARE = [

...

'django_xss_fuzzer.ViewFuzzerMiddleware'

]

Once you’ve restarted Django, it will replace anything “string-like” in the context data with a malicious string.

By default it will try <script>throw onerror=eval,\'=console.log\x28\\\'{0}\\\'\x29\'</script>.

The values that will be replaced:

- Any string variables

- Any attributes in a model instance that are strings

- Any attributes in a QuerySet containing data models that are strings

When you browse any of the pages on your site, you should see Django successfully protecting and escaping the strings.

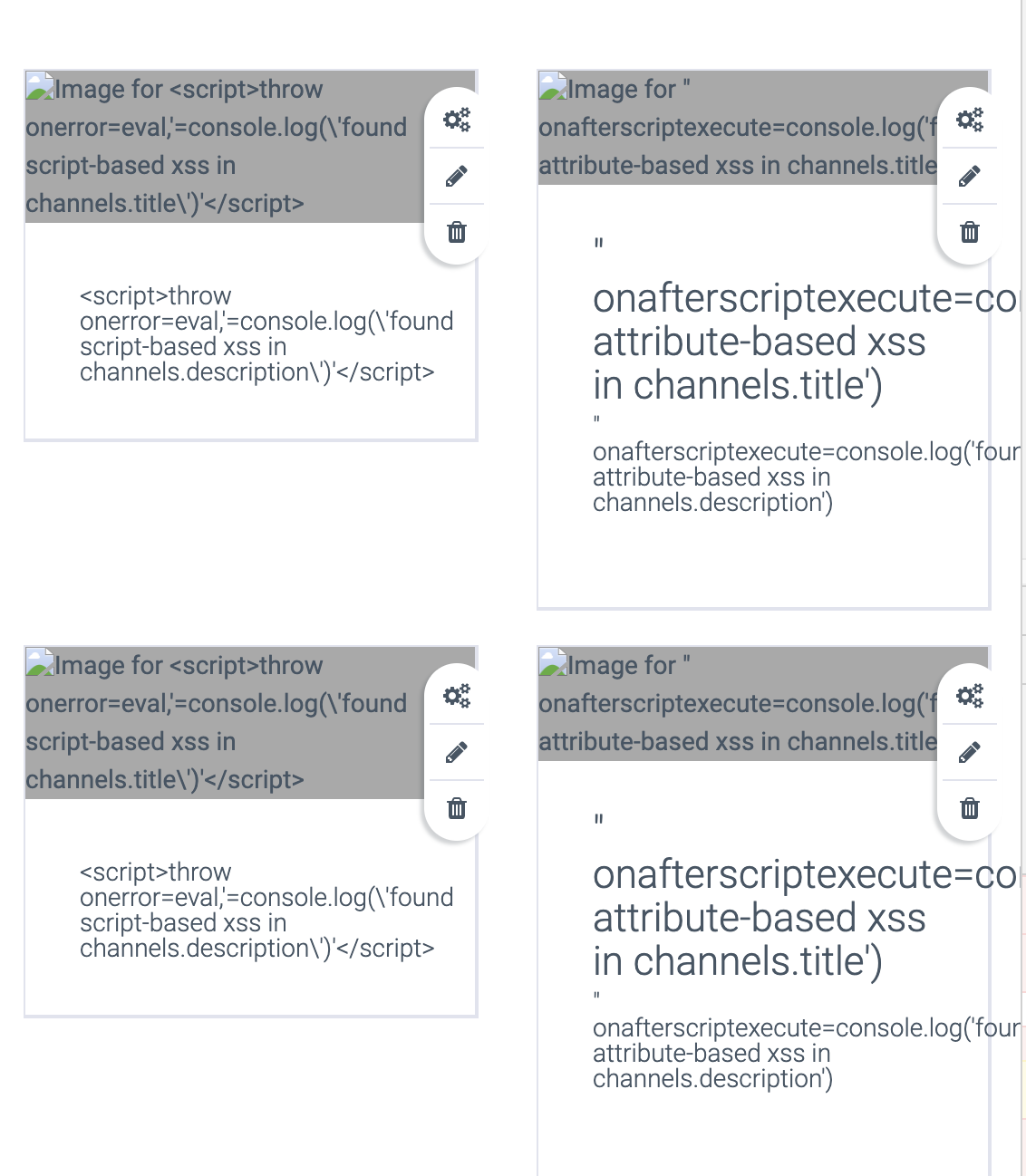

When you open the JavaScript console, any --SUCCESS[]-- messages mean that your page is vulnerable. The name of the field that was replaced will be inside the square brackets.

To change the malicious string, set the XSS_PATTERN variable in your Django settings.

It’s designed to be paired with PyTest, PyTest-Django, and Selenium so that it will try a range of malicious strings until it finds a successful attack vector.

The Selenium integration is required so that each view will be rendered and then processed by Chrome. Once Chrome has loaded the page, the tool will inspect the JavaScript log for any occurrences of --SUCCESS[field]-- and then fail the test if one is found.

Here is an example test for the URLs / and /home:

import pytest

paths = (

'/',

'/home'

)

@pytest.mark.django_db()

@pytest.mark.parametrize('path', paths)

def test_xss_patterns(selenium, live_server, settings, xss_pattern, path):

    setattr(settings, 'XSS_PATTERN', xss_pattern.string)

    selenium.get('%s%s' % (live_server.url, path))

    assert not xss_pattern.succeeded(selenium), xss_pattern.message

The test function test_xss_patterns is a parametrized test that will run a live server using pytest-django and open a browser for each test using pytest-selenium.

To test more views, just add the URIs to paths.

To set up Selenium, add the following to your conftest.py:

import pytest

@pytest.fixture(scope='session')

def session_capabilities(session_capabilities):

    session_capabilities['goog:loggingPrefs'] = {'browser': 'ALL'}

    return session_capabilities

@pytest.fixture

def chrome_options(chrome_options):

    chrome_options.headless = True

    return chrome_options

This will configure Chrome as headless and enable logging to capture the XSS flaws.

To run PyTest with this plugin, use the --driver flag as Chrome and --driver-path to point to a downloaded version of the Chrome Driver for the version of Chrome you have installed.

$ python -m pytest tests/ --driver Chrome --driver-path ~/Downloads/chromedriver83 -rs -vv

Once this is running, you’ll see something similar to the following output:

For each failed test, inspect that particular view with the attack string and see where the potential vulnerability is.